We’re entering an era of Mass Intelligence, where powerful AI is becoming as accessible as a Google search.

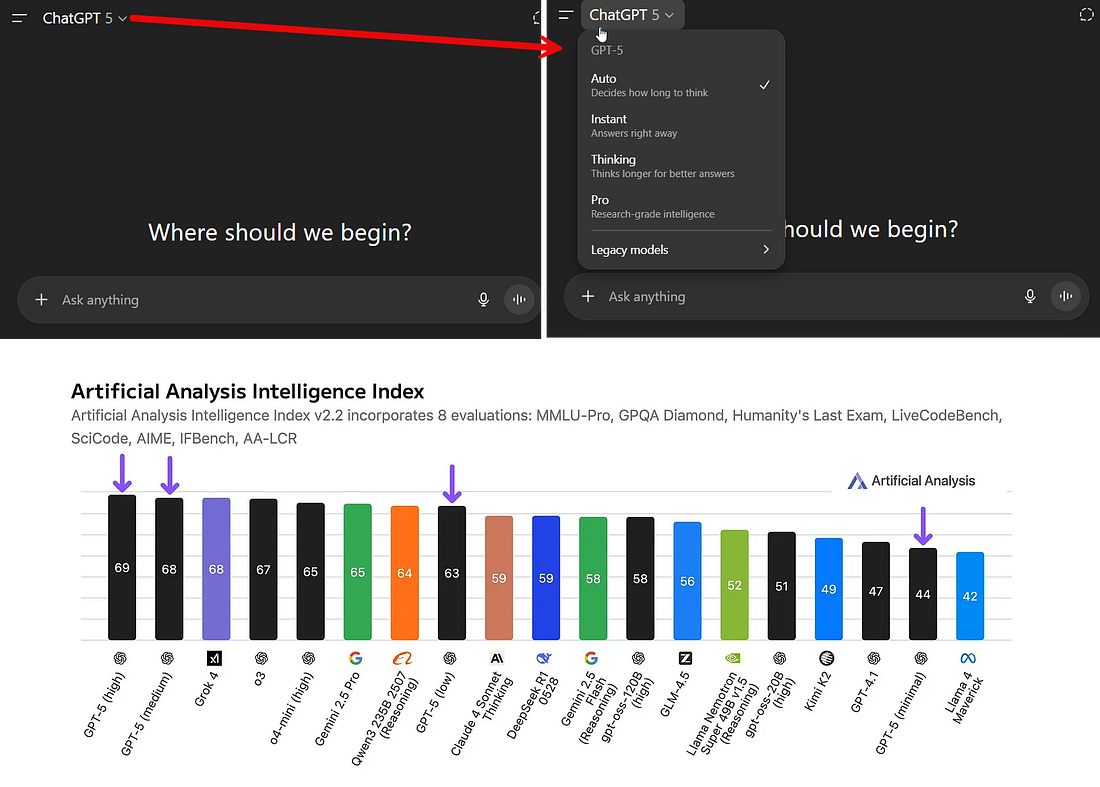

GPT-5 was supposed to solve both of these problems, which is partially why its debut was so messy and confusing. GPT-5 is actually two things. It is the overall name for a family of quite different models, from the weaker GPT-5 Nano to the powerful GPT-5 Pro. It is also the name given to the tool that picks which model to use and how much computing power the AI should spend on your problem. When you are writing to “GPT-5” you are actually talking to a router that is supposed to automatically decide whether your problem can be solved by a smaller, faster model or needs to go to a more powerful Reasoner.

This efficiency gain isn’t just financial; it’s also environmental. Google has reported that energy efficiency per prompt has improved by 33x in the last year alone. The marginal energy used by a standard prompt from a modern LLM in 2025 is relatively well established at this point, from both independent tests and official announcements. It is roughly 0.0003 kWh, the same energy use as 8-10 seconds of streaming Netflix or the equivalent of a Google search in 2008 (interestingly, image creation seems to use a similar amount of energy as a text prompt).
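To make the per-prompt figure easier to picture, here is a minimal sketch that converts the quoted 0.0003 kWh into watt-hours and joules, and works out the average power draw implied by the "8-10 seconds of streaming" comparison. The function names are hypothetical, and the implied wattage is only a consequence of the two quoted numbers, not a figure stated in the source.

```python
# Figure quoted in the highlight: marginal energy per standard prompt.
KWH_PER_PROMPT = 0.0003

# Unit conversion: 1 kWh = 3.6 million joules (exact by definition).
JOULES_PER_KWH = 3_600_000


def prompt_energy_wh(kwh: float = KWH_PER_PROMPT) -> float:
    """Energy per prompt in watt-hours (1 kWh = 1000 Wh)."""
    return kwh * 1000


def prompt_energy_joules(kwh: float = KWH_PER_PROMPT) -> float:
    """Energy per prompt in joules."""
    return kwh * JOULES_PER_KWH


def implied_power_watts(seconds: float, kwh: float = KWH_PER_PROMPT) -> float:
    """Average power (W) that would use the same energy over `seconds`.

    Assumption: the activity draws constant power for the whole duration.
    """
    return prompt_energy_joules(kwh) / seconds


if __name__ == "__main__":
    print(f"{prompt_energy_wh():.2f} Wh per prompt")
    print(f"{prompt_energy_joules():.0f} J per prompt")
    for s in (8, 10):
        print(f"{s} s of equivalent activity -> {implied_power_watts(s):.0f} W average")
```

Under these figures a prompt is about 0.3 Wh, or about 1,080 J, and the 8-10 second comparison corresponds to an average draw of roughly 108 to 135 W for the streaming activity.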

This is how a billion people suddenly get access to powerful AIs: not through some grand democratization initiative, but because the economics finally make it possible.

When a billion people have access to advanced AI, we’ve entered what we might call the era of Mass Intelligence. Every institution we have (schools, hospitals, courts, companies, governments) was built for a world where intelligence was scarce and expensive. Now every profession, every institution, every community has to figure out how to thrive with Mass Intelligence.
